Three leading researchers at the Warren B. Nelms Institute for the Connected World are using artificial intelligence (AI) to make the Internet of Things (IoT) more secure and more efficient. They have invited us into their laboratories to take a peek at the leading edge of AI applications.

Sandip Ray: Application of AI in Automotive Security

Autonomous, self-driving vehicles are coming. Already there are a large number of autonomous features in today’s vehicles, and each new variant progressively increases autonomy, augmenting and often replacing many functions of human operators. Autonomous features have the potential to make a transformative impact on the way we travel, enable efficient utilization of our transportation infrastructure, and improve safety by reducing and eventually eliminating human errors.

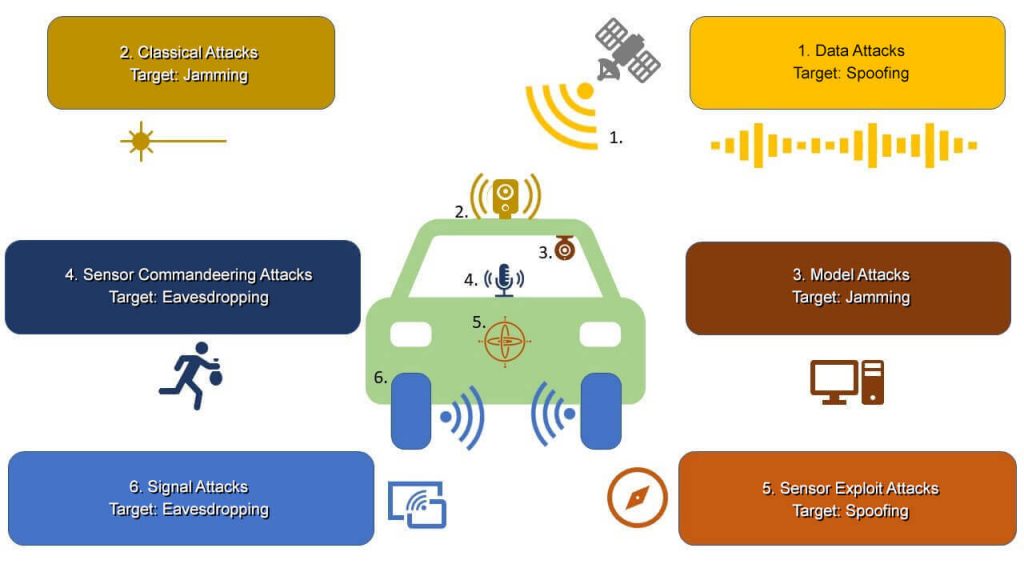

A modern vehicle is a very complex distributed system of electronic control units, sensors, and actuators that coordinate to perceive the environment and make driving decisions and actuations in real time. While advances in autonomy allow a vehicle to perform at higher efficiency, they also come at a cost. One drawback is that vehicles, like computers, become more vulnerable to cyber-attacks as they are equipped with more electronics and software applications. An adversary attacking a vehicle could cause catastrophic accidents and disrupt the transportation infrastructure. As we enter deeper into the world of autonomy, our lives will depend on vehicles’ resilience against such cyber-attacks.

A critical and primary application of AI is providing secure connected autonomous vehicles (CAV). Sandip Ray, Ph.D., Endowed IoT Term Professor in the Department of Electrical & Computer Engineering at UF, in collaboration with his team of researchers led by Ph.D. student Srivalli Boddupalli, has developed a unique AI-based architecture that provides real-time resiliency to CAVs against arbitrary attacks on vehicular communications and sensor systems. “To our knowledge, this is the first resiliency architecture that can withstand such a wide spectrum of attacks and on arbitrary connected applications,” Dr. Ray said. “This architecture depends critically on an anomaly detection system we built based on deep learning. We have demonstrated this approach on multiple CAV applications.”

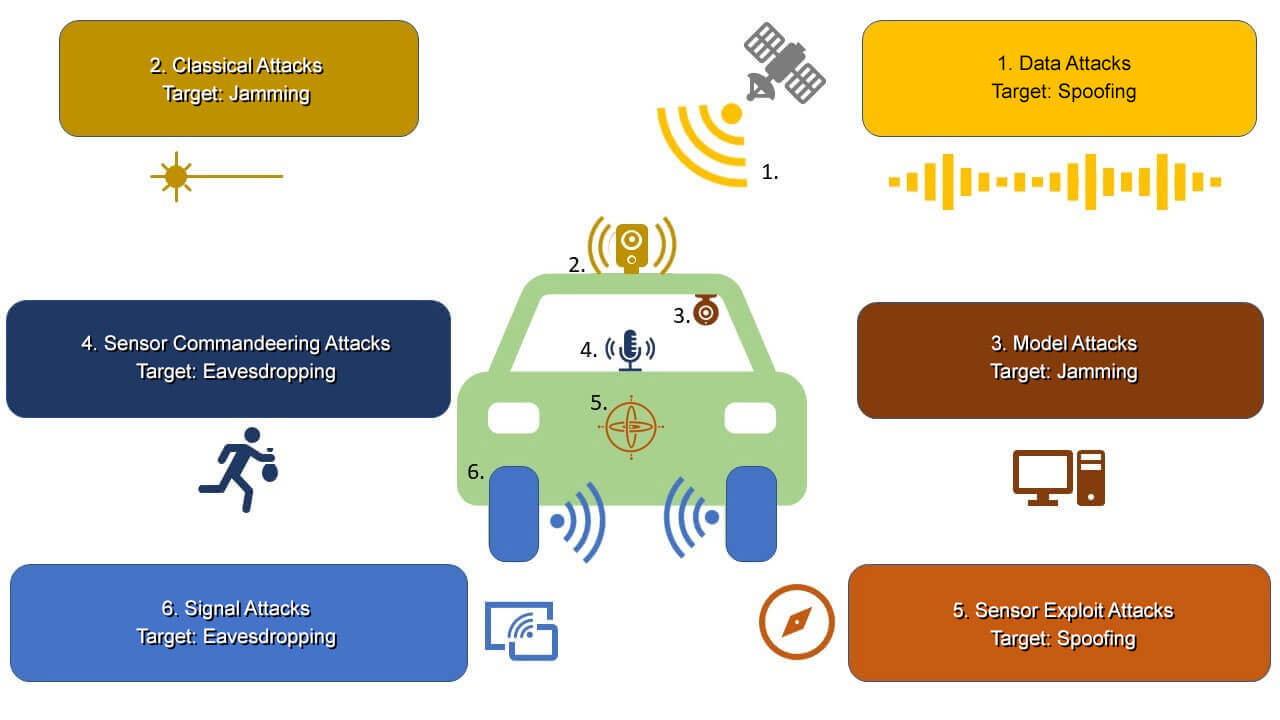

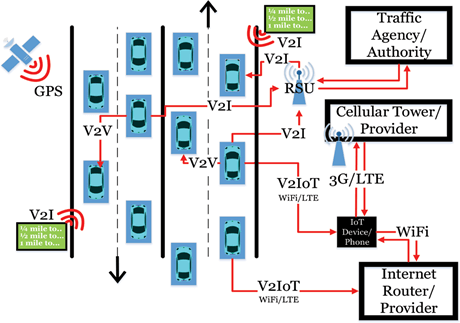

A key feature of next-generation autonomous vehicles is connectivity. An autonomous vehicle can “talk” to other vehicles as well as to the transportation infrastructure through sensors and inter-vehicular communications to ensure efficient mobility. Connected autonomous vehicular applications are already being aggressively designed; examples include platooning, cooperative collision detection, and cooperative on-ramp merging. Connectivity, however, is also one of the most vulnerable components of autonomous vehicles and one of the crucial entry points for cyber-attacks. A key feature of such attacks is that they do not require an adversary to actually hack into the hardware, software, or physical components of the target vehicle. An attacker can simply send misleading or even malformed communications to “confuse” the communication or sensor systems.

“To develop a successful safeguard against cyberattacks directed at connected vehicle applications, we have used advances in machine learning to create a unique, on-board predictor to detect, identify, and respond to malicious communications and sensory subversions,” Dr. Ray said. “A unique feature of the approach is that it can provide assured resiliency against a large class of adversaries, including unknown attacks. We have substantiated the approach on several CAV applications and developed an extensive experimental evaluation methodology for demonstrating such resiliency.”
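The predict-then-compare idea behind such an on-board safeguard can be sketched in a few lines. This is only an illustration under stated assumptions, not Dr. Ray's architecture: his system relies on a deep-learning anomaly detector, whereas the sketch below swaps in a simple linear extrapolation. The `Message` type, the `filter_message` function, and the 2 m/s threshold are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Message:
    t: float      # timestamp in seconds
    speed: float  # speed reported by the preceding vehicle, in m/s


def predict_speed(history: List[Message], t: float) -> float:
    """Extrapolate the expected speed at time t from the last two trusted messages.

    Stand-in for a learned predictor; assumes history is non-empty.
    """
    if len(history) < 2:
        return history[-1].speed
    a, b = history[-2], history[-1]
    dt = b.t - a.t
    if dt <= 0:
        return b.speed
    accel = (b.speed - a.speed) / dt
    return b.speed + accel * (t - b.t)


def filter_message(history: List[Message], msg: Message,
                   max_residual: float = 2.0) -> Tuple[float, bool]:
    """Return (speed_to_use, is_anomalous) for an incoming message.

    A message that deviates too far from the prediction is flagged and
    replaced by the predicted value; otherwise it is trusted and stored.
    """
    if not history:
        history.append(msg)
        return msg.speed, False
    expected = predict_speed(history, msg.t)
    if abs(msg.speed - expected) > max_residual:
        return expected, True   # respond: fall back to the prediction
    history.append(msg)
    return msg.speed, False
```

In this sketch a spoofed message that reports a sudden, physically implausible speed is not passed on to the driving logic; the vehicle keeps using its own estimate until trustworthy messages resume.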

Yier Jin: Application of AI in Internet Security

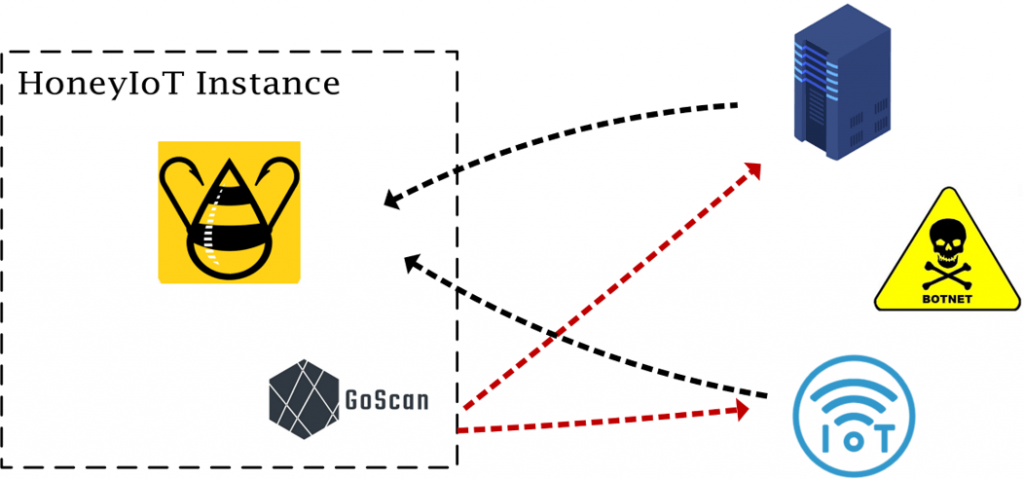

Supported by their sponsors, including the Air Force Research Laboratories, Yier Jin, Ph.D., Associate Professor and IoT Term Professor at the Nelms Institute, and his team have deployed a global honeypot network dedicated to Internet of Things security, named HoneyIoT. A honeypot is a computer security mechanism set up to detect, deflect, or otherwise counteract attempts at unauthorized use of information systems. HoneyIoT uses IoT decoy devices that monitor the Internet to predict attacks on IoT devices. Before a real attack happens, hackers usually perform some type of surveillance. Jin and his colleagues have installed monitors across the globe that can intercept this pre-attack surveillance.

“Through the HoneyIoT infrastructure, we collect around 3.5 GB of Internet traffic data every day. It is not possible to efficiently review these files manually for signs of cyberattacks, so we rely on AI techniques to analyze the data,” Jin said.

AI is heavily leveraged to help process the data collected for various purposes, including Internet traffic classification, payload extraction and analysis, and attack identification and prediction. Jin explained, “Supported by AI techniques, we are able to perform IoT botnet detection based on historical data and real-time data. Various IoT botnet attacks have been detected through our HoneyIoT infrastructure with the support of AI techniques.” Many IoT devices are susceptible to cyberattacks because manufacturers have little time or resources to put complex cybersecurity measures into their products. When hackers send out malware or deceptive instructions to IoT devices during the surveillance stage of their planned attack, the data is captured by Dr. Jin’s decoy devices and sent to the cloud. Dr. Jin’s group has further developed data analysis tools supported by AI techniques to “train” the AI models and to detect anomalous behaviors. The collected data will be analyzed and the results will also be visualized to help identify the IoT botnet threats.
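To give a feel for what automated review of decoy traffic might look like, here is a minimal sketch that flags sources probing many different ports, a common signature of pre-attack surveillance. It is a simple counting heuristic rather than the AI models Dr. Jin's group trains, and the event format (source address, destination port), the function name `find_scanners`, and the default threshold are assumptions made for the example.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def find_scanners(events: Iterable[Tuple[str, int]],
                  min_ports: int = 3) -> List[str]:
    """Return source addresses that touched at least min_ports distinct ports.

    Each event is a hypothetical (source_ip, destination_port) pair as a
    decoy device might log it.
    """
    ports_by_source: Dict[str, Set[int]] = defaultdict(set)
    for src_ip, dst_port in events:
        ports_by_source[src_ip].add(dst_port)
    return sorted(ip for ip, ports in ports_by_source.items()
                  if len(ports) >= min_ports)
```

A fixed threshold like this would miss slow or distributed scans, which is one reason a system handling gigabytes of traffic a day would turn to learned models instead.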

Swarup Bhunia: Application of AI for Efficient Image Compression

The emergent ecosystems of intelligent edge devices that allow data analysis close to the acquisition point in diverse IoT applications, from automatic surveillance to precision agriculture, increasingly rely on recording and processing a large volume of image data. Due to resource constraints (for example, energy and communication bandwidth requirements), these applications require compressing the recorded images before transmission.

Image compression in edge devices commonly requires three conditions:

- Maintaining features for coarse-grain pattern recognition (instead of the high-level details for human perception) used in machine-to-machine communications

- A high compression ratio that leads to improved energy and transmission efficiency

- A large dynamic range of compression and an easy trade-off between compression factor and quality of reconstruction to accommodate a wide diversity of IoT applications as well as their diverse energy/performance needs
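The trade-off in the last condition can be made concrete with a small sketch: given several compression settings, each with a measured output size and a measured accuracy on the downstream vision task, choose the smallest output that still meets the application's accuracy floor. The `Candidate` type and both functions are hypothetical helpers written for this illustration; they are not part of any compression framework described in this article.

```python
from typing import NamedTuple, Optional, Sequence


class Candidate(NamedTuple):
    setting: str           # label for a compression setting
    compressed_bytes: int  # measured size of the compressed image
    task_accuracy: float   # measured accuracy of the vision task, 0.0 to 1.0


def compression_ratio(original_bytes: int, compressed_bytes: int) -> float:
    """Ratio of original size to compressed size (higher means smaller output)."""
    if compressed_bytes <= 0:
        raise ValueError("compressed_bytes must be positive")
    return original_bytes / compressed_bytes


def pick_setting(candidates: Sequence[Candidate],
                 min_accuracy: float) -> Optional[Candidate]:
    """Return the smallest candidate whose accuracy meets the floor, if any."""
    acceptable = [c for c in candidates if c.task_accuracy >= min_accuracy]
    if not acceptable:
        return None
    return min(acceptable, key=lambda c: c.compressed_bytes)
```

An application with a tight energy budget could lower `min_accuracy` to accept a more aggressive setting, while one that needs reliable recognition would raise it and transmit more bytes.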

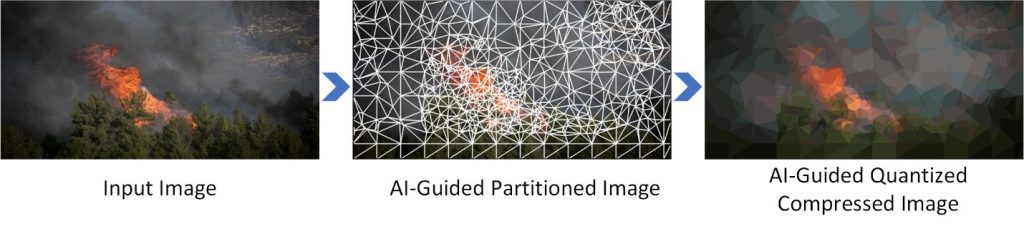

To address these requirements, Swarup Bhunia, Ph.D., Semmoto Endowed Professor of IoT and Director of UF’s Warren B. Nelms Institute for the Connected World, and his doctoral students, Prabuddha Chakraborty and Jonathan Cruz, are developing MAGIC, a novel machine learning (ML) guided image compression framework. Their framework judiciously sacrifices visual quality to achieve much higher compression when compared to traditional techniques while maintaining accuracy for coarse-grained vision tasks. The central idea is to capture application-specific domain knowledge and efficiently utilize it in achieving high compression. “We have demonstrated that the MAGIC framework is configurable across a wide range of compression/quality and is capable of compressing beyond the standard quality factor limits of both JPEG 2000 and WebP,” Dr. Bhunia said.

The research team is performing experiments on representative IoT applications using two open-source datasets, and they have shown over 40x higher compression at similar accuracy with respect to the source. Their technique also exhibits lower variance in compression rate across images than state-of-the-art compression techniques.